Nightmares of Our Own Creation

I have to admit something. When I wrote a few weeks ago about deleting my Facebook account, I did not mention that I had also created a completely blank burner account. As a person invested in and often researching communication technology, I feel it's important to keep a door open to see what's going on over there.

With unending genuflection to an authoritarian in the White House and general cowardice, Facebook has allowed droves and droves of garbage to flood its site. Since creating this blank account - no friends, no likes, just vibes - I have observed firsthand some of the most bizarre and unsettling content I have ever seen.

AI-generated non-consensual material of Billie Eilish, completely manufactured news stories with tens of thousands of likes, historical misinformation, and lots of skin. If you are a new user of Facebook and they aren't sure about you, they will feed you the most garish, sickening slop to keep you scrolling.

So today I wanted to share with you one of the things I've seen, why I think it exists, and what, if anything, we can do about it.

An anatomy of spam

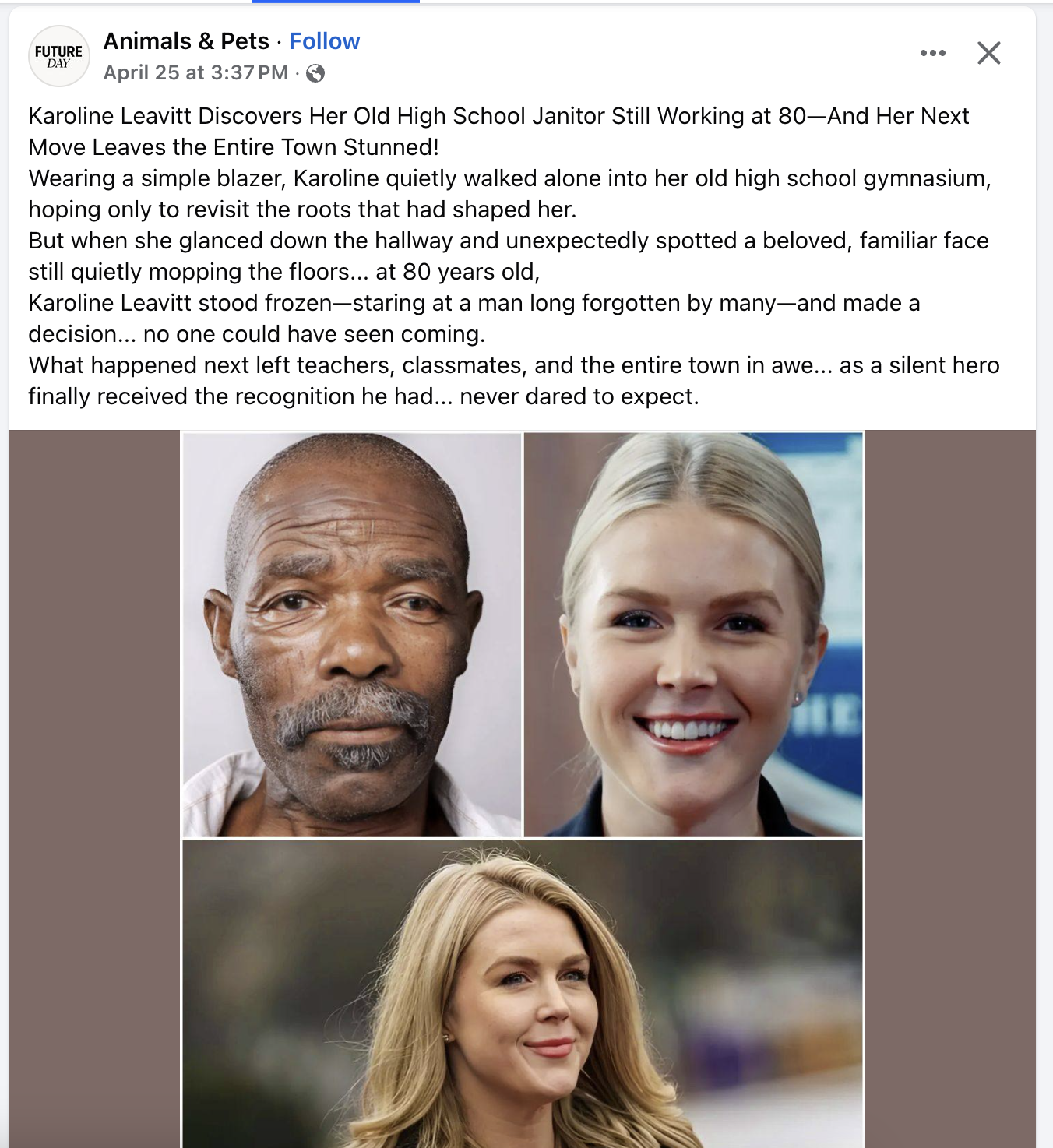

Hi, can I interest you in "Animals & Pets"? Our profile picture just says "FUTURE DAY" and we only post stories about Karoline Leavitt, Steph Curry, and Caitlin Clark.

So as you can see above, what we have here is a completely made-up (likely AI-generated) story that is attempting to valorize Karoline Leavitt, the current White House Press Secretary.

Now, why would this exist? Who would make this?

Just on the surface, when we see any media that attempts to characterize an otherwise controversial figure as noble we should be skeptical, even if it's a puff piece about a horrible man in the New York Times. But this is obviously not the New York Times.

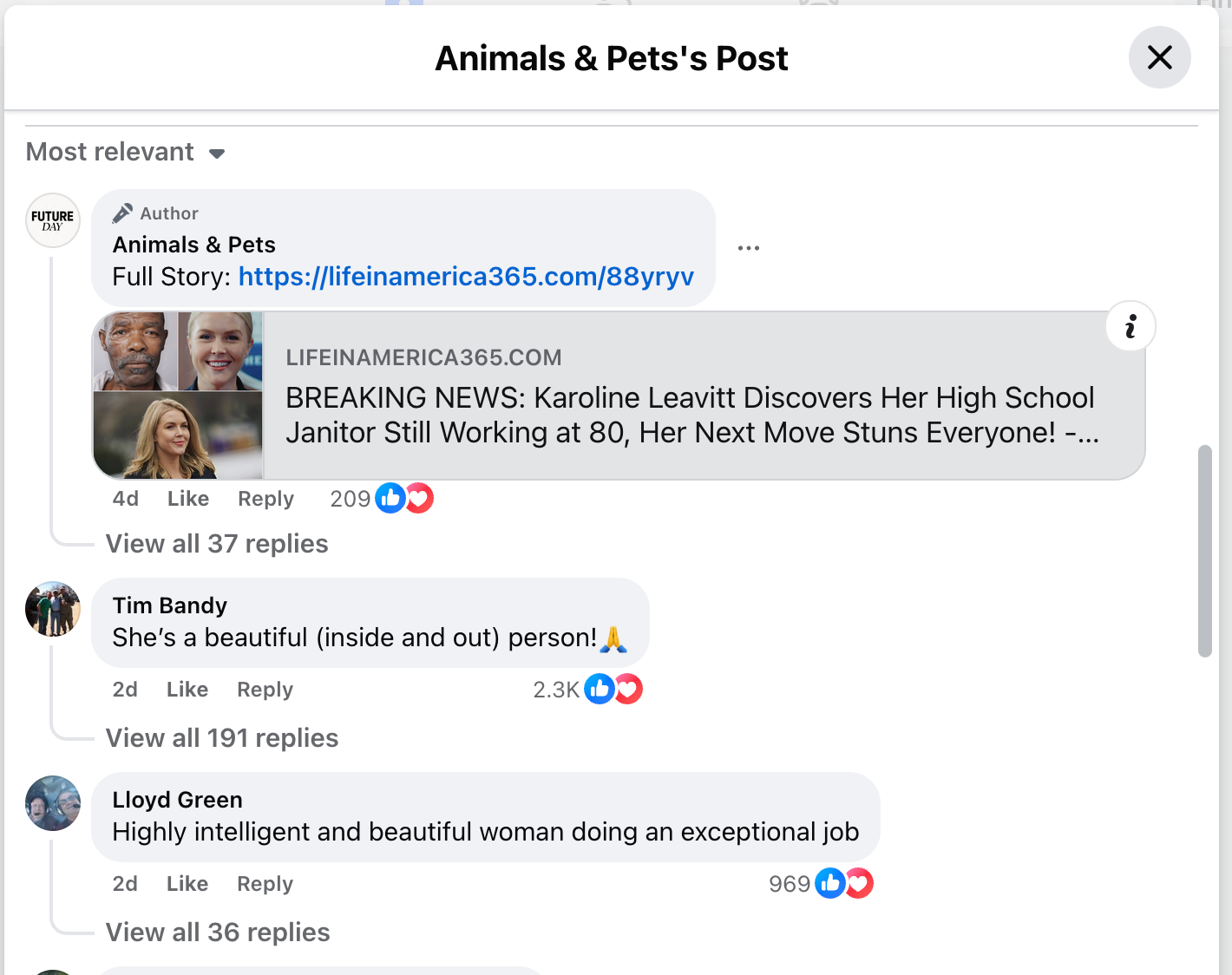

When we open the comments we can click through to the "article" that this post is coming from.

The link redirects you.

"viralstoryus.noithatnhaxinhbacgiang.com" is not a news source with which I am familiar.

This is deeply weird and unsettling, but under the surface lie incredibly simple mechanisms that allow spam and misinformation to flourish on sites like Facebook. Let's go down the pipeline here and see what we find.

Facebook is a product that literally relies on attention. Without a subscription model or insane VC funding, it relies on advertising to keep the lights on. This is not news, and many people can understand that Facebook's whole MO is to track your behavior, segment you into a marketable group, and then push material - both paid and unpaid - straight to your eyeballs.

Quick sidebar: What's the difference between "spam" and a "scam"? Spam is useless garbage that is mostly preying on your attention and the economies that thrive on it. A scam is maliciously targeted material designed to prey on your instincts in order to funnel money from you.

On the surface, what's happening here is spam, but it is definitely not innocuous.

What happens when you optimize for attention? Opportunists like whoever is running "viralstoryus" flood the system with incendiary, bias-confirming material that keeps people clicking, liking, scrolling, and watching.

But why?

Why go through all this trouble to generate all this? Why take up the mantle of zombie Facebook pages to post this garbage? How could this be helping anyone? Here are some answers:

SEO scams

Every day, Google's web crawlers index pages across the internet and the links between them. Google uses this data, along with other signals about what people click on, to rank results higher or lower in its search interface. Companies and media outlets will often attempt to game this system in order to drive traffic to their sites. This is why you will often see entire "news" headlines that just read "What time do the Oscars start tonight?" Outlets know that a lot of people will search these exact words, and they want to appear high in the search results to get people to click on their site to get people to look at their ads to make money.

In this particular case, there are no ads on the homepage, so that mechanism likely isn't in effect here. But if you look carefully you can see the full URL of the site "viralstoryus.noithatnhaxinhbacgiang.com." Go to noithatnhaxinhbacgiang.com and you'll find a Vietnamese furniture store.

What's possibly happening here is that this Vietnamese furniture store wants to drive traffic to its site via Google searches and other external links. By having a fraudulent news page up that gets thousands of Facebook interactions per post (and thus a lot of clickthrough), the furniture store gets a boost in Google's rankings. Google's algorithms see spikes in traffic to this domain and assume "Hm, I guess this is a site people want to see!"
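To make the subdomain trick concrete, here is a minimal Python sketch that splits a hostname into its subdomain and its parent domain. It is only an illustration, not a robust tool: the function name `split_host` is my own invention, and it naively assumes the parent domain is the last two labels of the hostname, which holds for this ".com" address but breaks for suffixes like ".co.uk".

```python
from urllib.parse import urlsplit


def split_host(url: str) -> tuple[str, str]:
    """Return (subdomain, parent_domain) for a URL or bare hostname.

    Assumption: the parent domain is the last two dot-separated labels.
    That is a simplification; real tools consult the Public Suffix List.
    """
    # urlsplit only fills in the hostname when a scheme or "//" is present,
    # so bare hostnames get a "//" prefix first.
    target = url if "://" in url else "//" + url
    host = urlsplit(target).hostname or ""
    labels = host.split(".")
    parent = ".".join(labels[-2:])
    subdomain = ".".join(labels[:-2])
    return subdomain, parent


subdomain, parent = split_host("viralstoryus.noithatnhaxinhbacgiang.com")
print(f"subdomain: {subdomain}")
print(f"parent domain: {parent}")
```

Whatever sits to the left of the parent domain can be named anything the domain owner likes, so a newsy-sounding subdomain tells you nothing. The parent domain is the part worth looking up.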

Targeted misinformation

For years now, really since before the 2016 election, we have known that foreign adversaries have seeded fake news and sown discord amongst Americans via Facebook. These efforts may have several downstream effects favorable to adversarial interests.

- Creating political discord in the US

- Distracting populations from more pressing societal concerns

- Forming fraudulent "in group" cohesion

- Sowing distrust in professionalized media

This can be observed in the inflammatory, click-baity, politicized content being distributed. Did Karoline Leavitt really go to her high school and raise funds for the retirement of her beloved janitor? Certainly not. A story like this seeks to confirm the biases of right-leaning people looking to share material that further validates their support for the president.

This further entrenches political divides and fractures an American sense of truth and reality. This, I don't think it is a stretch to say, bolsters the superpowers that oppose us by making the American population slower, dumber, and so profoundly aggravated that nothing meaningful ever gets done.

Data harvesting

Even without accepting site permissions or consenting to cookies, a useful batch of your data is tracked by practically every site you visit, especially when you're using Chrome.

Sites like my example may be parts of a pipeline that allows them to use your data in ways you may not appreciate. Even when the sites are tracking you without any personally identifiable information, they can be using reports of traffic sources and IP addresses to sell information to other brokers to then further target you and people like you.

How you can spot it

Whenever I find a page that I am skeptical of for whatever reason, I scroll all the way to the bottom to try to find links to a Privacy Policy or Terms of Use. A legitimate search engine like Google will often downrank you for failing to have these pages, especially if you are (or are masquerading as) a news site. So scam/spam sites will often put fake Privacy Policy or Terms of Use pages up with generic language.

On a real Privacy Policy or Terms of Use page, you will see a lot of legalese and disclosures about data collection. But what you are looking for is an address and contact information. European and US state laws require data collectors to provide this information for users.

Let's take a look at the contact information for "viralstoryus.noithatnhaxinhbacgiang.com"

Yes, that number is literally five five five, one two three, four five six seven.

And when you go to that address in Google Maps you'll see a Truist bank in a supermarket parking lot.

These are immediate and significant hints that the site you are dealing with is not a serious outlet.
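The phone number check is simple enough to sketch in a few lines of Python. This is a rough heuristic under my own assumptions, not an authoritative validator: the function name `looks_like_placeholder_phone` is hypothetical, it only handles 10-digit US-style numbers, and the rules (a "555" area code, the "555-01xx" range commonly used in fiction, or a "123-4567" run) are just the patterns I would look for by eye.

```python
import re


def looks_like_placeholder_phone(raw: str) -> bool:
    """Heuristically flag US-style phone numbers that look like filler."""
    digits = re.sub(r"\D", "", raw)  # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop a leading US country code
    if len(digits) != 10:
        return False  # outside what this sketch knows how to judge

    area, exchange, line = digits[:3], digits[3:6], digits[6:]
    if area == "555":
        return True  # 555 is a classic fake area code
    if exchange == "555" and line.startswith("01"):
        return True  # the 555-01xx range is commonly used in fiction
    if exchange + line == "1234567":
        return True  # someone just counted upward
    return False


print(looks_like_placeholder_phone("555-123-4567"))
```

A hit here does not prove a site is fraudulent, and a miss does not prove it is real. It is one more signal alongside the address check.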

The AI of it all

Gurgling right under all of this is a foreboding slurry of AI nightmares. Let's talk about two ways in which AI is being implemented to spam Facebook, and what it could mean for the future.

First, of course, there's the AI-generated content. Scrolling on my baby burner Facebook feed, the vast majority of media I encounter is AI-generated in some way. I have seen dozens of non-consensual suggestive images of celebrities. I have seen entire lies about history made to look legitimate with photorealistic AI images. Whole articles, about things that have or have not happened, are snapped into existence.

For any functioning speech platform, this is an existential, extinction-level event. The ways in which this much slop is bad for society cannot be overstated, but it's also just horrible business for Facebook, which relies on real human eyeballs enjoying the time they spend on the site.

Speaking of real human eyeballs, the second way AI is being implemented is in the form of bots. These bots will fabricate attention for all types of things, commenting, liking, friending, doing all the things real people do on Facebook. The flywheel is extremely easy to exploit: generate incendiary Facebook content, unleash a horde of bots to say insane shit in the comments, watch as engagement on your post increases, watch as clickthrough to your data mining/SEO scam/disinformation site skyrockets. Pay for more AI and bots and do it all over again.

I believe that Facebook literally cannot survive this. Their entire business model relies on the attention and clickthrough of real human beings with real human money. While they may be able to retain the biggest users of the platform, I believe that regular people will begin to avoid a platform filled less with their friends and family and more with AI videos of Elsa from Frozen playing tennis or fetish images of feet. I could be wrong, but Facebook is playing with fire here, and risks losing their money printer without rigorous oversight and content moderation.

Why Facebook won't do anything

As I've written before, Facebook came into 2025 eager to do away with their existing content moderation apparatus.

But there are other reasons they may be disinclined to really come down hard on this rapidly proliferating behavior.

For one, Facebook's parent company Meta is in the AI business. The very same spam being generated on their site could have come from their own models. They may be wary of taking a strident position against certain uses of AI because they have a vested interest in the AI market. Skepticism toward one use of AI may spread to other uses, potentially disrupting future income streams they hope their AI services will provide.

Additionally, for the moment there are still humans on Facebook. The algorithm will continue to serve up the most delicious, sugary content to those people. The Ouroboros has the tail in its sights, but hasn't chomped down yet.

To close out, I hope that exploring some of this obviously fraudulent material will help you examine other content you may interact with, even the real stuff. The characteristics of the spam - the clickbaity titles, the gripping imagery, the incendiary comments - are only being exploited because spammers know that these things game the system. Many media outlets, even the reputable, professionalized ones, use these same tactics daily to keep you engaged.

Perhaps it is wishful thinking, but I don't believe that this is ultimately what people want out of their online experiences. I think that right now there are not a lot of viable alternatives, especially if you want to connect with people you know, but I can see the possible future where this all goes away. I hope you're able to see it too.