It's Probably Broken and I Don't Believe You

Content warning: This post includes a story about a teen suicide.

"It's probably broken and I don't believe you" is a common catchphrase of Nilay Patel, the editor-in-chief of The Verge. The phrase is deployed most frequently on The Vergecast when talking about "groundbreaking" new AI stuff. After companies march out glitzy debuts of their AI products, they like to tell us how innovative, necessary, and life-changing those products will be.

But for right now, lots of companies are not delivering on the transformative promise of their products. Billions (and billions) (and billions) of dollars are being poured into various AI upstarts without much evidence that what they are creating is useful or worthwhile.

For today's Warp, I want to highlight a few stories of grave AI failures to give you an honest counterbalance to the AI evangelism we see on earnings calls, product debuts, and advertising. All three of these stories are from just the last few days!

OpenAI's transcription tool Whisper is a total mess. While the company insists the tool should not be used in "high-risk domains," they have not gone out of their way to restrict access to it. In this particular story, researchers were able to find massive amounts of "hallucinations" in medical recordings.

In an example they uncovered, a speaker said, “He, the boy, was going to, I’m not sure exactly, take the umbrella.”

But the transcription software added: “He took a big piece of a cross, a teeny, small piece ... I’m sure he didn’t have a terror knife so he killed a number of people.”

A speaker in another recording described “two other girls and one lady.” Whisper invented extra commentary on race, adding “two other girls and one lady, um, which were Black.”

In a third transcription, Whisper invented a non-existent medication called “hyperactivated antibiotics.”

In fairness, I see the usefulness of transcribing medical visits. It obviously brings up a number of privacy concerns, but I think it's a good thing if more people can access exactly what was said in potentially high-stakes conversations regarding one's health. But the entire idea becomes useless when the thing listening just starts inventing things that were never said. There are existing transcription services that do not do this!

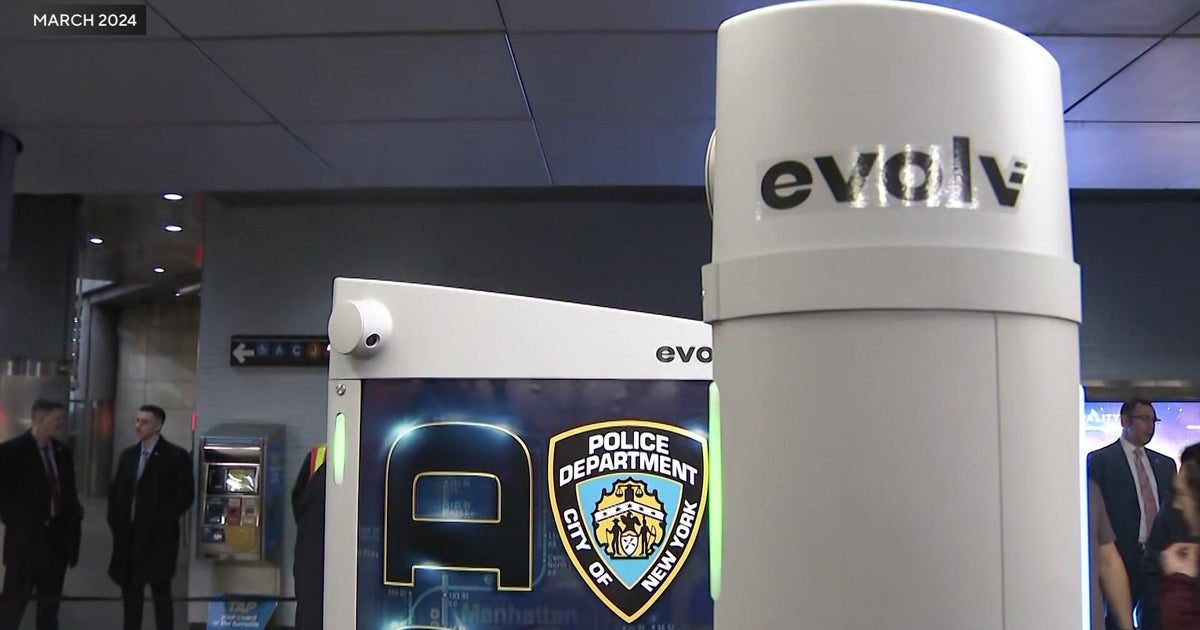

Surveillance is bad. That's a core belief of this newsletter. But surveillance that doesn't even work? Not only bad, but an incredible waste of money.

This year, the laughable and corrupt Adams administration put a bunch of AI gun and weapon detectors in key spots around New York City. The company that made them, Evolv, suggested that through the use of sensors, video, and predictive software they could identify concealed guns and other weapons being carried around the subways. The only problem is that they don't work. Like, they don't work at all.

According to the NYPD, some 2,749 scans were done during the pilot program. There were 118 false positives, the NYPD said. Twelve knives were recovered. No guns were recovered.

Zero guns! Not a one! Twelve knives and 118 false positives! None of those 12 knives led to an arrest, which suggests that none of them were possessed illegally.

So 118 people were stopped unnecessarily, a big chunk of the city's budget didn't go to something useful, and the AI part of this didn't even work!
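If you want to see just how lopsided those NYPD numbers are, here is a quick back-of-the-envelope sketch. The four figures come straight from the quote above. The function name and the idea that the total number of alerts equals false positives plus knives plus guns are my own assumptions, since the NYPD did not publish a total alert count.

```python
def pilot_rates(scans, false_positives, knives, guns):
    """Summarize the pilot as simple ratios (illustrative helper, not an official metric)."""
    weapons_found = knives + guns
    # Assumption: every alert was either a false positive or a recovered weapon.
    alerts = false_positives + weapons_found
    return {
        "false_positive_rate": false_positives / scans,
        "weapon_hit_rate": weapons_found / scans,
        "alert_precision": weapons_found / alerts if alerts else 0.0,
        "gun_hit_rate": guns / scans,
    }


# Figures reported by the NYPD for the pilot program.
rates = pilot_rates(scans=2749, false_positives=118, knives=12, guns=0)

for name, value in rates.items():
    print(f"{name}: {value:.2%}")
```

Under that assumption, roughly 4.3% of all scans were false alarms, and only about 9% of alerts turned up anything at all, none of it a gun.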

This final story is incredibly sad. Here's a little bit of context.

One use case of AI chatbots is to have them role-play as different characters. This is the foundational product of the company character.ai. Character.ai offers a wide variety of AI chatbots that will interact with users as if they are "real" people. A quick glance at the site will show you that these chatbots often take on the personae of animated characters or cultural archetypes, which can be a draw for younger audiences.

You can read the excellent reporting that Kevin Roose at the New York Times did above, but I want to highlight one of the failures of not just character.ai, but technology companies everywhere.

These companies do not tend to prioritize safety, even when they know their user base may skew younger. They prioritize new features, innovative ideas, and a kind of "move fast and break things" mentality that has remained popular amongst Silicon Valley companies. This is extraordinarily irresponsible, especially when deploying AI that may act in unpredictable ways.

Our government has a number of regulations that are meant to protect consumers. Our food industry, our automotive industry, our early childhood industry: all of them are required to meet certain standards of local, state, and/or federal regulation in order to operate. While twenty years ago it may have been preposterous to think of media or software technology companies as requiring this kind of regulation, recent history has provided more than enough evidence that we need oversight of these companies.

If AI and technology companies are going to be responsible stewards of innovation, we must collectively hold them accountable. My heart breaks for this boy and his mother. This should never have happened.

We are being bombarded with messaging that AI is here to fix our problems. I get a number of ads each day promising that Watsonx intelligence will open up new insights for me and my business, or whatever. I am sure that it will. But alongside this AI evangelism, we must remain present and skeptical of these new developments. We cannot have the good and ignore the bad.